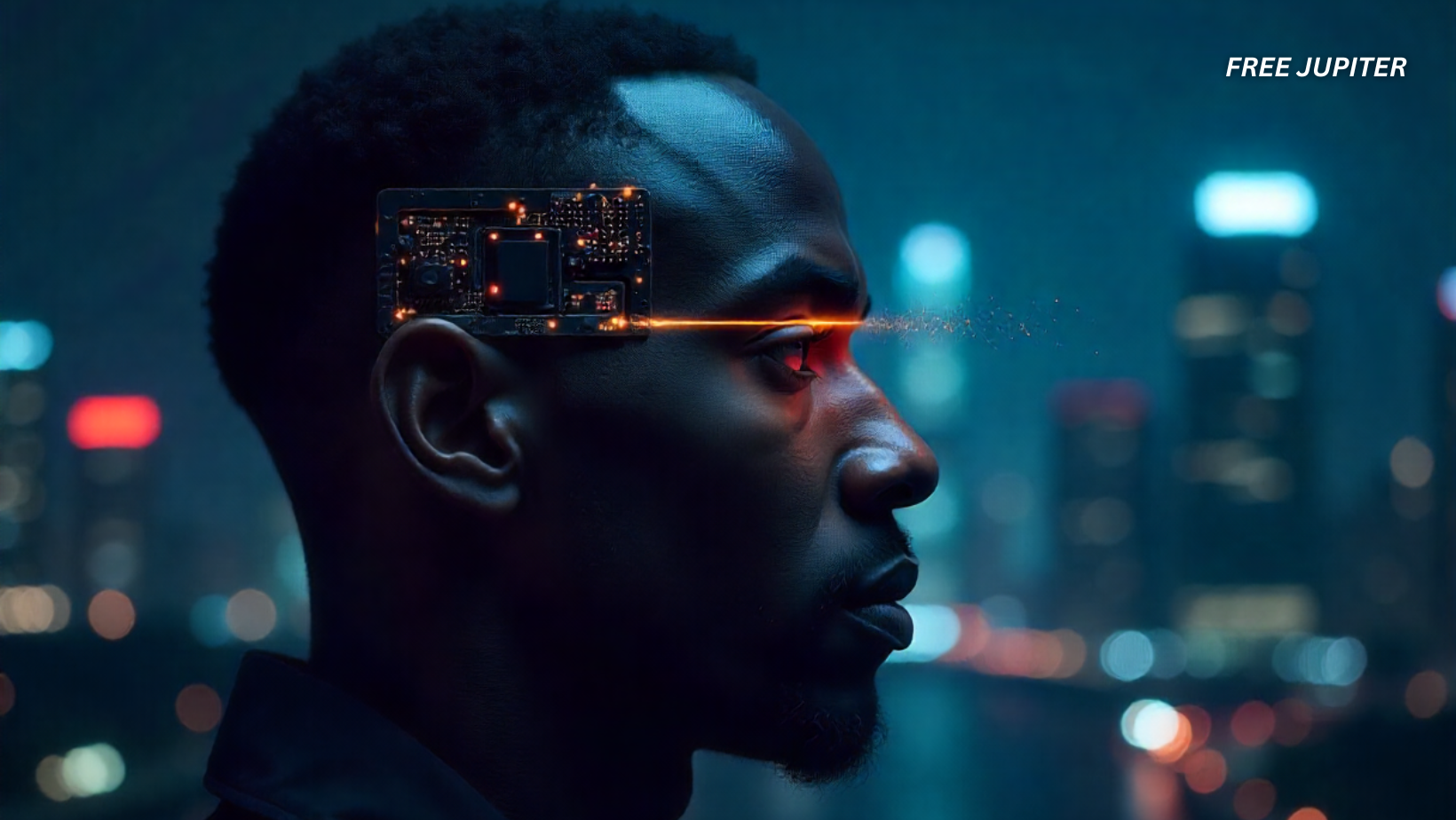

Imagine being able to send a text message, write an email, or tell someone how you feel without ever opening your mouth. It may sound like a plot from a futuristic movie, but researchers at Stanford University have brought this one step closer to reality.

They’ve been working on a brain–computer interface (BCI) that can “translate” silently imagined speech into written words, and in its best tests it was correct up to 74% of the time. While that’s not perfect, it’s a significant step forward for communication technology.

What Exactly Is a Brain–Computer Interface?

A brain–computer interface is a system that allows direct communication between the brain and a machine. It works by detecting the brain’s natural electrical activity and converting it into commands that a computer can understand.

Our brains are constantly producing tiny electrical signals as billions of neurons send messages to each other. A BCI captures these signals using special sensors, interprets their patterns, and then translates them into actions — like moving a prosthetic arm, controlling a cursor, or in this case, turning thoughts into text.

This is why BCIs are so promising for people with disabilities or speech impairments. They could provide a new way to interact with the world without relying on physical movement or spoken words.

How the Stanford Experiment Worked

The Stanford team recruited four volunteers, each willing to have a small device implanted in the brain. The device contained microelectrodes — hair-thin wires that record brain activity. These were placed in the motor cortex, a part of the brain that helps control movement and speech.

The volunteers were given two types of tasks:

- Speaking out loud a set of words.

- Silently imagining themselves speaking those same words.

Interestingly, both actions activated some of the same brain regions. This meant that even without moving the mouth, the brain was still producing patterns that could be linked to speech.

Once these patterns were recorded, the researchers used artificial intelligence to “train” the system. The AI learned to associate certain brain activity patterns with certain words. Over time, it became better at guessing what participants were thinking — even when the words were never spoken.
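
To give a feel for what “associating patterns with words” can mean, here is a deliberately tiny sketch. It is not the Stanford team’s method, which the article does not describe in detail. It swaps in a simple nearest-average classifier, and everything in it (the three-number “recordings”, the word list, the noise level, the function names) is a made-up assumption for illustration.

```python
import math
import random

def train_centroids(examples):
    """Average the feature vectors recorded for each word."""
    sums, counts = {}, {}
    for word, features in examples:
        if word not in sums:
            sums[word] = [0.0] * len(features)
            counts[word] = 0
        sums[word] = [s + f for s, f in zip(sums[word], features)]
        counts[word] += 1
    return {w: [s / counts[w] for s in sums[w]] for w in sums}

def decode(centroids, features):
    """Return the word whose average pattern is closest to a new recording."""
    return min(centroids, key=lambda w: math.dist(centroids[w], features))

# Synthetic stand-in for brain recordings (hypothetical, not real data):
# each word has an idealised pattern, and every "recording" adds random noise.
rng = random.Random(0)
PROTOTYPES = {
    "hello": [1.0, 0.0, 0.0],
    "water": [0.0, 1.0, 0.0],
    "help": [0.0, 0.0, 1.0],
}

def simulate_recording(word, noise=0.2):
    return [x + rng.gauss(0.0, noise) for x in PROTOTYPES[word]]

# "Training": 20 noisy recordings per word, averaged into one pattern each.
training = [(w, simulate_recording(w)) for w in PROTOTYPES for _ in range(20)]
centroids = train_centroids(training)

# "Decoding": guess the word behind a fresh noisy recording.
print(decode(centroids, simulate_recording("water")))
```

A real speech decoder works with far richer signals and far more capable models, but the basic loop is the same: collect labelled examples, learn what each word tends to look like, then match new activity against what was learned.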

The Accuracy Factor

In its best trials, the system correctly identified the imagined sentences nearly three-quarters of the time. For such an early-stage project, that’s impressive.

It’s worth noting that the system wasn’t simply “reading random thoughts” floating through a person’s mind. The participants had to be actively thinking about specific words from a predefined list.
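
The article does not say exactly how the accuracy figure was calculated. One common way to score a speech decoder is word error rate: count how many word insertions, deletions, and substitutions it takes to turn the decoded sentence into the intended one, then divide by the length of the intended sentence. The sketch below shows that calculation under that assumption; the function name and example sentences are illustrative only.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference sentence must contain at least one word")
    # prev[j] = edits needed to turn the reference words seen so far
    # into the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # reference word dropped
                            curr[j - 1] + 1,      # extra word in hypothesis
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)

# One wrong word out of five gives an error rate of 0.2 (80% of words correct).
print(word_error_rate("i would like some water", "i would like sun water"))  # 0.2
```

Seen this way, a system that is right about three-quarters of the time still gets roughly one word in four wrong, which is why the figure counts as promising rather than finished.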

A Password for Privacy

One of the clever touches in the experiment was a built-in security measure: a mental password. Before the system could start decoding silent speech, the participant had to think of a specific phrase.

The chosen phrase? “Chitty chitty bang bang.”

A bit whimsical, yes — but it worked. The AI recognised the password with 99% accuracy before it would “unlock” the thought-to-text translation.

This step is more than just a fun gimmick; it’s a safeguard to ensure the device can’t be activated without the user’s permission.
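
In software terms, the idea resembles a lock in front of the decoder: nothing is shown until the passphrase is detected with enough confidence. The sketch below is one hypothetical way to express that. The class name, the confidence threshold, and the way confidence is passed in are all assumptions, not details from the study.

```python
class GatedDecoder:
    """Holds back decoded text until a mental passphrase is recognised."""

    def __init__(self, passphrase, threshold=0.9):
        self.passphrase = passphrase
        self.threshold = threshold  # hypothetical minimum confidence to unlock
        self.unlocked = False

    def process(self, decoded_text, confidence):
        """Return text to display, or None while the gate is still locked."""
        if not self.unlocked:
            if decoded_text == self.passphrase and confidence >= self.threshold:
                self.unlocked = True
            # Nothing is shown before unlocking, including the passphrase itself.
            return None
        return decoded_text


gate = GatedDecoder("chitty chitty bang bang")
print(gate.process("i am thirsty", 0.95))             # None (still locked)
print(gate.process("chitty chitty bang bang", 0.99))  # None (unlocks the gate)
print(gate.process("i am thirsty", 0.95))             # i am thirsty
```

The useful property is that anything the user imagines before the passphrase is discarded rather than displayed, so private inner speech stays private by default.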

Why This Research Matters

For people who have lost the ability to speak — due to paralysis, neurological disorders like ALS, or severe injuries — this technology could one day be life-changing.

Current assistive devices often require slow, laborious input methods, like eye-tracking keyboards. A fluent, thought-based system could restore natural, back-and-forth conversation for people who haven’t spoken in years.

It could also be adapted for situations where speaking isn’t possible, such as communicating in noisy environments, underwater, or even on space missions where sound-based communication is impractical.

The Challenges Ahead

While the thought-to-text brain chip feels like something ripped straight from a sci-fi novel, turning it into a practical everyday tool is a much bigger challenge than it sounds. The Stanford team’s early success is promising, but there are several hurdles to clear before this technology could be widely available.

1. Accuracy and Vocabulary Size

Right now, the system correctly interprets imagined sentences up to 74% of the time in its best trials, which is impressive for such an early project but not reliable enough for real-world use. Misreading a word here and there might be fine in casual conversation, but it could cause serious misunderstandings in medical settings, legal situations, or emergencies.

Also, the vocabulary the AI works with is still limited. Expanding it to recognise thousands of words — including slang, names, and regional expressions — will require far more data and training.

2. Invasiveness of the Technology

The current version requires a surgical implant, which means brain surgery. That’s a huge barrier for most people, especially those who don’t already need an operation for medical reasons. In the future, researchers hope to develop less invasive versions — perhaps using advanced external sensors or wearable headsets that can read brain activity without opening the skull. But that’s still a work in progress.

3. Training the AI for Real-World Conditions

Right now, the AI is trained under controlled lab conditions. The brain signals are recorded while the participant is sitting still, focused, and thinking about specific words. In real life, our thoughts are messy — we get distracted, we switch topics, we mix languages, and sometimes our inner speech is just… nonsense. A real-world BCI would need to sort through all that mental noise and still produce accurate results.

4. Privacy and Security Concerns

One of the biggest questions is: who gets access to your thoughts? The idea of a “mind-reading” machine raises serious ethical and legal concerns. What if someone hacked into it? Could thoughts be recorded without consent? The Stanford team’s password system (“Chitty chitty bang bang”) is a lighthearted start, but a robust privacy framework will be essential before BCIs can be used outside the lab.

5. Cost and Accessibility

Even if the technology works perfectly, it needs to be affordable and accessible. Cutting-edge medical devices often come with enormous price tags, which could put them out of reach for the people who need them most. Scaling production, simplifying the design, and integrating it into existing medical care systems will be key.

6. Social and Psychological Adaptation

Communicating through a brain chip would be a big adjustment for both the user and the people interacting with them. There may be a learning curve, and some people may feel uneasy about the idea of thought-based communication at first. Public understanding — and trust — will play a huge role in how widely this technology is accepted.

A Glimpse Into the Future

Frank Willett, an assistant professor of neurosurgery at Stanford and one of the project leaders, believes this is just the beginning. “This work gives real hope that speech BCIs can one day restore communication that is as fluent, natural, and comfortable as conversational speech,” he said.

If that day comes, people who have been locked inside their own minds could once again tell stories, express emotions, and share ideas — not with keyboards or voice synthesizers, but with pure thought.

And yes, perhaps their first words might still be “Chitty chitty bang bang.”